I'll admit it, I've got a weak spot for programming competitions. One year I scheduled my vacations so that I would have a full free week for all of the official Perl Golf tournaments scheduled that summer. (It was worth it too, I won one of them).

Even so, I wasn't going to enter the Netflix Prize, no matter how cool it sounds. That much money at stake means that it's almost certainly going to be dominated by teams of people with real understanding of the problem domain, probably PhDs in statistics, and more computing resources than a single desktop PC. And while winning might not be the point, I want to at least have a good shot at getting a respectable result.

But after reading the ongoing comp.lang.lisp thread about the competition ("Subject: massive data analysis with lisp"), and disagreeing with most of what was written there, I needed to validate my opinions on how the data should be represented internally, by writing at least the data-handling parts of the program.

-

In-memory database, which each rating stored as a separate structure instance? That's going to take gigabytes of memory due to the headers and the inefficient struct slot packing.

-

Using an SQL database? That seems like a horrible idea, unless you intend to do all of your processing using SQL functions. Once you want to do anything even moderately complex on the lisp-side, you'll need to transfer the data over, and get buried in the overhead.

-

Storing the data as flat files, and using READ for reading them? AARGH!

My gut feeling – supported by complete lack of knowledge of the problem domain – is that there's simply the wrong amount of data for any of these to be feasible, compared to an efficient in-memory representation.

The plan was to pack each datapoint into a x86-64 fixnum (22 bits for the user info, 15 for the movie, and 3 for the rating, lots of padding), write the fixnums into a file with a suitable SBCL array header as a prefix, and then mmap the file. This has several nice properties: it loads instantly, can be accessed at memory speed, is reasonably memory-efficient, doesn't stress the GC, has good paging characteristics (on-demand, copy-on-write), and looks like a normal Lisp vector. mmap two copies with the entries sorted in a different order, and you can also do easy and quick searches and joins bon both movies and uids.

On the minus side, this does take 2*800MB of memory and disk space. Luckily that's just right for my machine, so the program will still run without any disk trashing (the whole working set won't really fit into memory at once, but the accesses have a pretty good locality).

The exact specifics of the data layout are obviously not crucial, just something that works for me. Somebody else might make different tradeoffs, like omitting the movie from the rating, and inferring it from the array index to get it to fit into less memory / 29-bit fixnums. Or you might store the different properties (movie / uid / rating) in separate arrays, to get better cache / page locality.

Initially this was all that I was going to do. But once the the data was available, I couldn't resist playing around with it a little. Pretty soon I had a working implementation of an unsophisticated brute-force algorithm, which I expected would be barely better than the naive approach of just predicting averages. But actually it ended up getting a slightly better score on the probe dataset than the current Cinematch algorithm does. The most likely reason was that I didn't bother separating the probe set from the training data, which would skew the results.

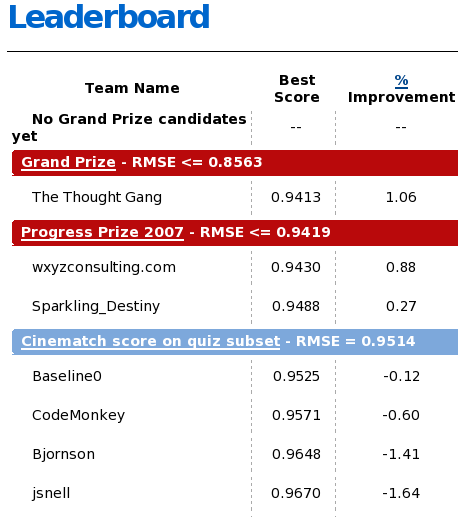

So I did the qualifying dataset too, and submitted it for scoring. As expected the score on that one is worse, but it's not nearly as bad as I feared. Rather it's 7th on the leaderboard, a mere 1.6% behind Cinematch. I'm fairly convinced that if I'd been using any other sort of data representation, I would've been forced to abandon this algorithm as too slow. Even as-is, it'll take several hours to process the full qualifying set.

There are a few morals to this story:

-

It's not very hard to get reasonably good results for this task (I spent about 10 hours coding, starting from scratch). And I've forgotten the little I learned in the intro to statistics course, and never knew anything about implementing databases in the first place. So if the problem looks interesting, but you don't think you're qualified... Just give it a shot.

-

Lisp is an excellent tool for these kinds of problems. Interactive image-based development and a compiler with reasonable performance is a great combination. Especially if you dig into the implementation-specific features for the exotic operations, instead of requiring absolute portability. I would still be coding the first version in C++, I probably wouldn't have even imported the data efficiently into Java, and my heart is bleeding for the poor souls who are apparently trying to use PHP and MySQL for this.

-

Don't submit your first result ;-) Now I have to wait for a week to see whether the simple changes that improve the probe result by about 0.015 RMSE will also work properly on the qualifying set.

Ouch.

Can you expand on the movie/uid/rating in separate arrays bit? I'm not sure I understood how that would be implemented. You still got to connect them somehow, wouldn't that increase memory use a lot? (also, inferring movieid seems difficult? You've got to keep which movies a user have rated stored somewhere.)

Searching cll for memory mapping files after reading this post, I found that not everyone agrees that mmap is the best/only solution (http://groups-beta.google.com/group/comp.lang.lisp/msg/aa476e9de0746eeb) but keeping the data compact/seekable to avoid reading from disk unneccessarily is obviously a good Idea.

Keep it up, you're down to 11th. :-)